Industries

Consumer Goods

Distribution & Logistics

Energy & Utilities

Equity Firms & Portcos

Fintech & Financial Services

Healthcare & Life Sciences

Manufacturing & Facilities

Non-Profits & Social Impact

Professional Services

Public Sector & Education

Real Estate & Mortgage

Retail & Commerce

SaaS & Cloud Software

Services & Home Improvement

Telecom &

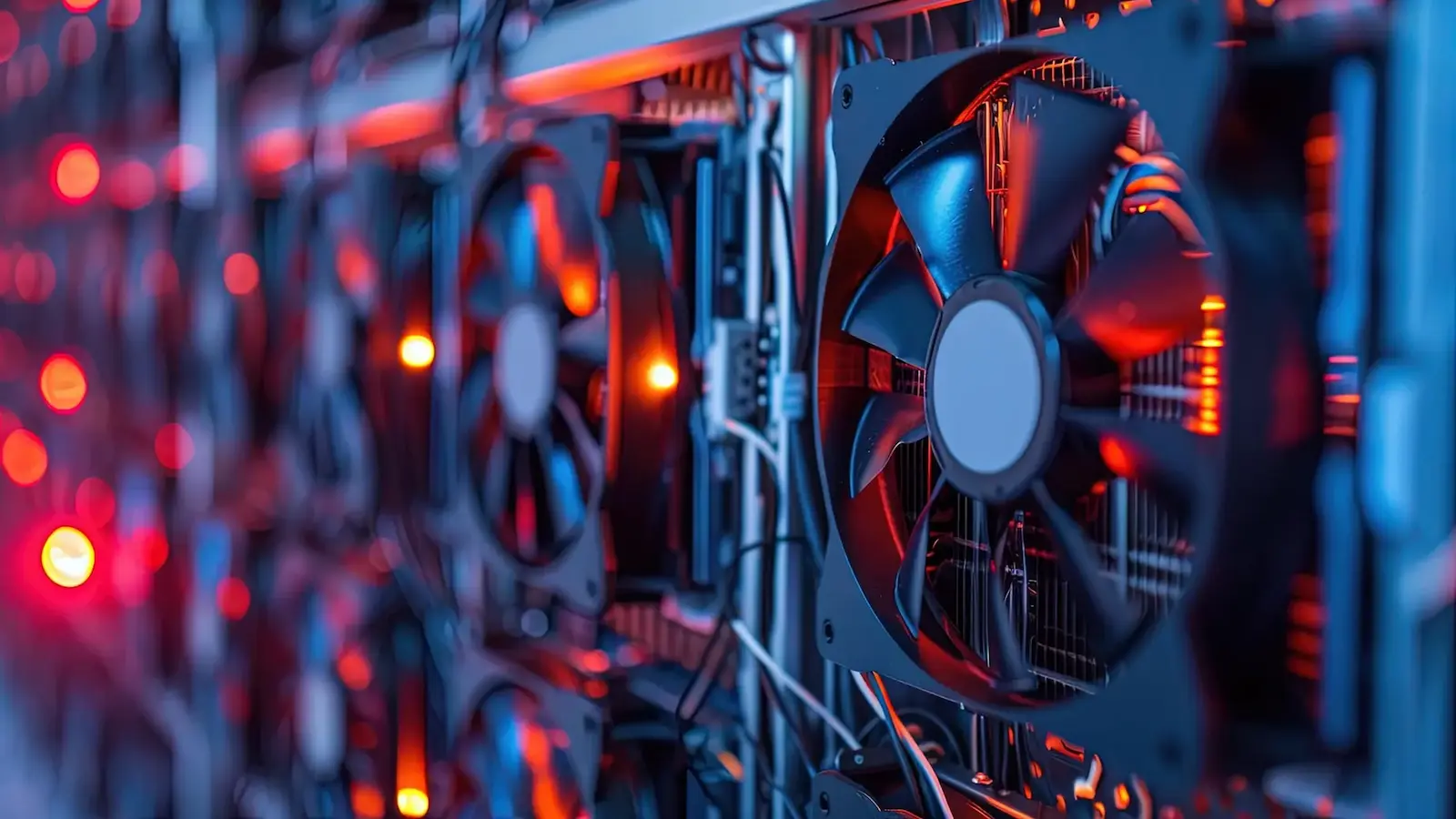

Enterprise Hardware

Enterprise Hardware

Travel, Hospitality & Entertainment

Services

Insights

About Us
